About

I enjoy exploring challenging problems and ideas at the intersection of Mathematics and Computer Science. Research is central to how I work and think, and I’m especially drawn to questions where intuition is built gradually through both reasoning and experimentation. I value environments where curiosity matters, precise questions are encouraged, and progress comes from sitting with uncertainty rather than rushing to answers.

Much of my current work focuses on large language models and representation learning, where I study how models behave under realistic constraints and imperfect data. I am currently preparing a research submission for ICML 2026 that examines how architectural design choices in large language models shape learned representations and enable efficient adaptation across tasks.

Research & Publications

Algoverse — Research Fellow (Publication in Progress)

Aug 2025 – Present

- Designed LoRA adapter experiments for cross-modal retrieval, comparing two-hop routing through a shared latent space against direct-encoding baselines using the Perception Encoder.

- Evaluated adapter routing on COCO image–text retrieval, achieving performance competitive with the direct-encoding baselines while enabling flexible cross-modal transfer.

- Contributing experimental results to an ICML 2026 submission, "Harnessing Universal Embedding Geometry," which investigates representational convergence across vision–language architectures.

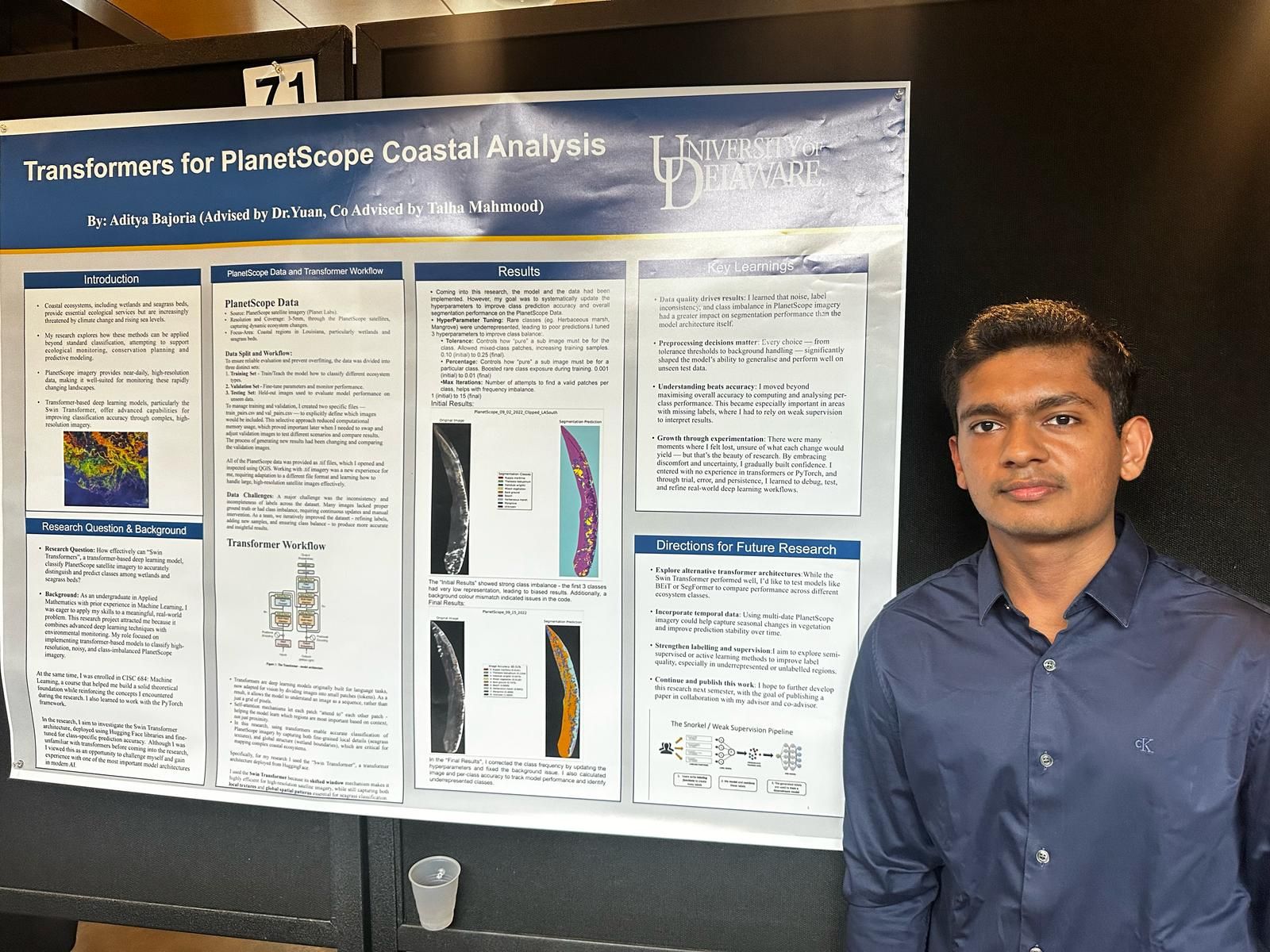

PlanetScope CASE Lab — Undergraduate Researcher

Jun 2025 – Present

- Applied Swin Transformer architectures to semantic segmentation of Louisiana's coastal wetlands, optimizing batch inference for high-dimensional multispectral imagery.

- Engineered a custom PyTorch pipeline with class-weighted loss, extensive augmentation tuning, and optimized inference on high-resolution coastal tiles, achieving a 19% improvement in minority-class accuracy.

- Benchmarked Swin Transformer against DeepLabV3 and SegFormer, establishing baseline performance metrics for coastal wetland segmentation.

Experience

- Developed scalable React.js applications with Redux state management, optimizing component structure and client-side logic to reduce interface load time by 25%.

- Improved deployment reliability for high-traffic production environments by automating CI/CD workflows and adopting a modular front-end architecture.

- Designed and implemented responsive UI systems using Tailwind CSS and Bootstrap, ensuring cross-platform consistency across global client campaigns.

- Implemented 20+ domain-specific embedding models in Python and developed SQL pipelines for efficient retrieval across large enterprise datasets.

- Built and evaluated supervised learning models, improving predictive accuracy by 30% through feature engineering and preprocessing.

- Integrated the OpenAI API and other LLM APIs into internal tools, applying prompt-engineering strategies to automate documentation workflows.

Projects

Neural Proof Assistant

Built a weak-supervision pipeline to classify mathematical proof techniques by learning structured representations from unlabeled proof text. Designed Snorkel-style labeling functions to generate training signals, analyzed and resolved conflicts between heuristics, and trained embedding-based classifiers that produce confidence-aware predictions within an end-to-end ML system.

Pitch Miles

Developed a sports analytics system to quantify how travel distance and rest days affect away-team performance. Built data pipelines spanning multiple seasons, modeled performance while controlling for opponent strength, and communicated results through interactive visualizations for comparative analysis.

Contact

I'd love to connect with you.

Send a message and I’ll respond as soon as I can.